Last Updated on September 22, 2022 by Jay

This tutorial walks through how to run Stable Diffusion on a Windows computer for free. Stable Diffusion is artificial intelligence software that creates images from text. It’s totally awesome software because from now on, I can create my own images for the blog articles!

Take a look at what the software can generate just using the default settings:

Art title: “final fantasy, futuristic city, realistic, 8k highly detailed, hyperdetailed, digital art, matte”

by Jay’s Computer (and Stable Diffusion)

This software is mostly written in Python, so that’s another good reason for me to take a look. The project’s official GitHub page is here: https://github.com/CompVis/stable-diffusion

Hardware Requirements

As of Sept 2, 2022, Stable Diffusion:

- Can only run on an Nvidia GPU (graphics card); it doesn’t work on AMD cards.

- Needs at least 4 GB of video RAM, and at that size we’ll need to use an “optimized” version.

Follow these steps to download and run Stable Diffusion on a Windows computer.

Download The Model Weights

The model weights are several gigabytes, so let’s start by downloading them. The latest model weights are version 1-4 and can be found here: https://huggingface.co/CompVis/stable-diffusion-v-1-4-original

You’ll need to register an account at Hugging Face and log in to gain access. While this is downloading, let’s set up other things.

Download the Stable Diffusion Source Code

There are two versions of the source code, and we’ll want to get a copy of both.

Official Version (not optimized)

Stable Diffusion is open source software written in Python. The official GitHub project page is here: https://github.com/CompVis/stable-diffusion. Feel free to grab a copy of the code.

Optimized Version

The official version requires a high-end graphics card with at least 10GB VRAM to generate a 512 x 512 image at default settings. Someone named Basu created an “optimized” version that allows running the software on a graphics card with 4GB VRAM. We can get the optimized version here: https://github.com/basujindal/stable-diffusion. Thanks, Basu!

From the optimized version, we only need the files in the “optimizedSD” folder. What I did was copy this folder and put it inside the official version.
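If you’d rather script the copy than drag the folder around in Explorer, here is a minimal sketch of the same step. The function name copy_optimized_folder is just something I made up, and it assumes you have both repositories unpacked somewhere on disk.

```python
import shutil
from pathlib import Path


def copy_optimized_folder(optimized_repo, official_repo):
    """Copy the optimizedSD folder from the optimized checkout into the official one."""
    source = Path(optimized_repo) / "optimizedSD"
    target = Path(official_repo) / "optimizedSD"
    if not source.is_dir():
        raise FileNotFoundError(f"No optimizedSD folder found in {optimized_repo}")
    # dirs_exist_ok=True (Python 3.8+) lets the copy be re-run without errors.
    shutil.copytree(source, target, dirs_exist_ok=True)
    return target
```

Call it with the two folder locations on your own computer, for example copy_optimized_folder("optimized-download", "stable-diffusion-main"), where "optimized-download" is a placeholder for wherever you unpacked Basu’s version.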

Now we have the source code, let’s get Python.

Install Anaconda & Set Up Environment

Maybe you are very used to using pip for installation, I get it (I’m the same). However, using the Anaconda Distribution will make it so much easier, so I highly recommend getting Anaconda first, then setting up the environment. I will explain why very soon.

Go to the Anaconda official website https://www.anaconda.com, and download and install Anaconda. If your Anaconda doesn’t launch properly after the installation, refer to this guide to fix the problem: How to Fix Anaconda Doesn’t Launch Issue.

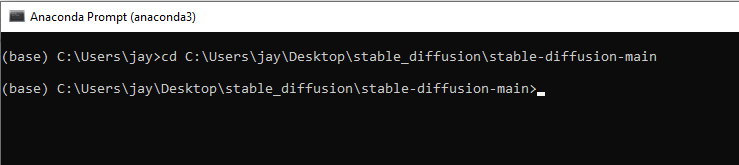

After installing Anaconda, open the Anaconda Prompt, and navigate to the folder that contains the source code.

This folder contains an “environment.yaml” file, which is like a configuration file for building the Python (conda) environment. It is similar to “requirements.txt” for pip. Feel free to open the file in a text editor and see what dependencies are needed for running Stable Diffusion.

In the Anaconda Prompt, type the following commands, which will first create a conda environment (downloading and installing all required dependencies) and then activate it.

conda env create -f environment.yaml

conda activate ldm

The above commands will also install CUDA, software developed by Nvidia that allows other programs to directly control the graphics card for parallel computation. CUDA is not a Python library and is not on the PyPI index. This is the reason we don’t want to use pip to set up the environment: we’d have to jump through a few hoops.
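Once the environment is activated, a quick way to confirm that Python can actually see the graphics card is a small check like the one below. This is only a sketch: the function name describe_gpu is mine, and it assumes PyTorch was installed as one of the dependencies listed in environment.yaml.

```python
def describe_gpu():
    """Return a one-line summary of the GPU that PyTorch can see, if any."""
    try:
        import torch  # assumed to be present in the ldm environment
    except ImportError:
        return "PyTorch is not installed; is the ldm environment activated?"
    if not torch.cuda.is_available():
        return "PyTorch is installed, but no CUDA-capable GPU was detected."
    props = torch.cuda.get_device_properties(0)
    vram_gb = props.total_memory / 1024**3  # bytes -> GB
    return f"{props.name}: {vram_gb:.1f} GB VRAM"


print(describe_gpu())
```

If it reports your card and its VRAM, the CUDA part of the installation is most likely fine.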

One Last Step – Model Weights

By now, the model weights download we started at the beginning has probably finished. We need to move the file to a specific location.

Go to this folder first: \stable-diffusion-main\models\ldm

Then create a new folder, name it stable-diffusion-v1. This folder did not exist when we first downloaded the code.

Move the downloaded weight file sd-v1-4.ckpt into the new folder we just created, then rename it to model.ckpt. After these steps, the file should be at \stable-diffusion-main\models\ldm\stable-diffusion-v1\model.ckpt.
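The same create-folder, move, and rename steps can be sketched in a few lines of Python. The function name install_weights is hypothetical, and you would pass in wherever your browser saved the download.

```python
import shutil
from pathlib import Path


def install_weights(downloaded_ckpt, repo_root):
    """Move the downloaded checkpoint to models/ldm/stable-diffusion-v1/model.ckpt."""
    target_dir = Path(repo_root) / "models" / "ldm" / "stable-diffusion-v1"
    # This folder does not exist in a fresh copy of the code, so create it.
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / "model.ckpt"
    # Moving to the new name handles the move and the rename in one step.
    shutil.move(str(downloaded_ckpt), str(target))
    return target
```

For example, install_weights("sd-v1-4.ckpt", "stable-diffusion-main") would work if the checkpoint sits in the current folder next to the source code folder.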

Start Creating Art!

Now the setup is finished, and we are ready to run Stable Diffusion on our Windows computer! Inside the same Anaconda Prompt, type the following command:

python scripts/txt2img.py --prompt "beautiful portrait, hyperdetailed, art by Yoshitaka Amano, 8k highly detailed" --plms

Note that the string following --prompt is the text input describing what we want in the image. Feel free to get creative here. Each time we run the software, Stable Diffusion will give random results, and I got several different images from the prompt above.
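To try several prompts in one go, the command can also be launched from a small Python script. This is a rough sketch, not part of the official project: build_txt2img_command and run_prompts are names I made up, and the optional --seed flag is one I believe the script accepts (run python scripts/txt2img.py --help to confirm).

```python
import subprocess


def build_txt2img_command(prompt, use_plms=True, seed=None):
    """Build the argument list for one run of scripts/txt2img.py."""
    command = ["python", "scripts/txt2img.py", "--prompt", prompt]
    if use_plms:
        command.append("--plms")
    if seed is not None:
        # Assumption: the script takes --seed; check its --help output.
        command += ["--seed", str(seed)]
    return command


def run_prompts(prompts, runner=subprocess.run):
    """Run txt2img once per prompt, stopping if any run fails."""
    for prompt in prompts:
        runner(build_txt2img_command(prompt), check=True)
```

Passing the arguments as a list means the prompt does not need extra quoting, even when it contains commas and spaces. Run it from the source code folder inside the activated ldm environment.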

Wait, The Program Crashed??

Yes, without tweaking, you’ll need a very high-end (beyond consumer grade) graphics card to run this program. Below are two ways to run this program without crashing.

If You Have A Good Graphic Card (RTX 3080+)

The bad news is the official version (un-optimized) still does not run on a 3080 card. I’m not sure about 3090 and above though. You might get an “out of memory” error. Yes, it can go out of memory on a 10GB VRAM card…

With this kind of GPU, we have two options.

Option 1: Minor adjustment in the txt2img.py file

Add model.half() inside the function load_model_from_config(). This converts the model weights to 16-bit floating point, which significantly reduces the VRAM usage.
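Here is a minimal sketch of where that call could sit. The helper name finish_loading is hypothetical; in the real script the equivalent lines are at the end of load_model_from_config(), which, as far as I can tell, finishes by moving the model to the GPU and switching it to evaluation mode. I’m assuming model is a PyTorch module, whose half() and cuda() methods return the module itself.

```python
def finish_loading(model, use_half=True):
    """Final steps of loading the model, with an optional 16-bit cast."""
    if use_half:
        # Cast floating-point weights to 16-bit, roughly halving their size in VRAM.
        model = model.half()
    model = model.cuda()  # move the weights onto the graphics card
    model.eval()          # inference mode, no training behaviour
    return model
```

In txt2img.py itself, the change amounts to the single line model.half() placed in that same spot.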

With this change, I was able to generate images using an RTX 3080, almost maxing out the VRAM.

Option 2: Use the optimized Stable Diffusion version

This version allows us to run Stable Diffusion with a 4GB graphics card. We simply need to run another Python script inside the optimizedSD folder, using the command below. Note that this method takes longer to run; that is the trade-off for using less VRAM.

python optimizedSD\optimized_txt2img.py --prompt "beautiful portrait, hyperdetailed, art by Yoshitaka Amano, 8k highly detailed"

If You Have A Lower End Graphic Card (RTX 2000s and below)

For a 4GB VRAM graphic card, the best option is to use the “optimized” version. See Option 2 above.
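To sum up the choice between the two scripts, here is a tiny sketch that picks one based on how much VRAM the card has. The function name recommend_script is made up, and the 4 GB and 10 GB cut-offs are only the rough numbers from my own experience described above, not official limits.

```python
def recommend_script(vram_gb):
    """Suggest which script to run for a card with the given VRAM (in GB)."""
    if vram_gb < 4:
        # Below the 4 GB minimum mentioned in the hardware requirements.
        return None
    if vram_gb < 10:
        # Slower, but fits into a small amount of VRAM.
        return "optimizedSD/optimized_txt2img.py"
    # On a 10 GB card this still needed the model.half() change to avoid crashing.
    return "scripts/txt2img.py"
```

For example, recommend_script(4) points to the optimized script, while recommend_script(10) points to the official one.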

Enjoy creating your art!

When installing, I ran into problems installing dlib because cmake is not installed. Installing cmake doesn’t fix the issue. I’ve read multiple guides on installing SD on Windows and none of them mention the issues with cmake and dlib. I’ve been googling and haven’t found a solution. Any suggestions?

Did you use the conda env create command for the installation? I didn’t have the cmake problem on either of my PCs.

There are many ways to install SD, maybe you can try another one if the approach in the guide doesn’t work out.

Hey, first of all, thank you a lot for this tutorial!

I have a question: when trying to install the dependencies from the environment.yaml file, it failed with an error saying that I don’t have Git.

Then, I installed git with conda install git.

I tried to re-run the command conda env create -f environment.yaml, but it won’t let me!

Do you have any ideas how to resume the installation of those dependencies?

Thanks

No problem and glad I was able to help.

I had a similar issue with Git during one of my installations too, and below is what I posted on YouTube to answer someone else who had the same problem:

These steps helped fix the problem:

1. Download and install Git (https://git-scm.com/download/win). Git is version control software used by many people, so it’s safe to use. People also use it to download packages/code from GitHub with the “git clone” command. That’s what you might be missing.

2. In Anaconda Prompt, type “conda env remove -n ldm” to remove the previously unfinished environment.

3. Delete the ldm folder from your conda installation folder. For me it’s in “C:\Users\jay\anaconda3\envs”; you’ll want to replace the user name when trying this on your computer.

4. Once all of the above is done, restart the Anaconda Prompt so the Git installation registers properly with it. Then cd to the appropriate folder and type “conda env create -f environment.yaml” again.

Thanks for this tutorial.

Being connected behind a proxy, I had some issues with git (Command errored out with exit status 128: git clone, Pip failed). This was solved using:

git config --global http.proxy http://yourproxy:yourproxyport

Though the text2img image generation is rather straightforward, I am wondering how you can get access to intermediate results (the latents produced before the decoder-based image generation).

I would also be interested in getting the latents of a particular image. Any Python commands you would recommend?

ThanXXX